- #INSTALL APACHE SPARK ON MAC HOW TO#

- #INSTALL APACHE SPARK ON MAC INSTALL#

- #INSTALL APACHE SPARK ON MAC DRIVER#

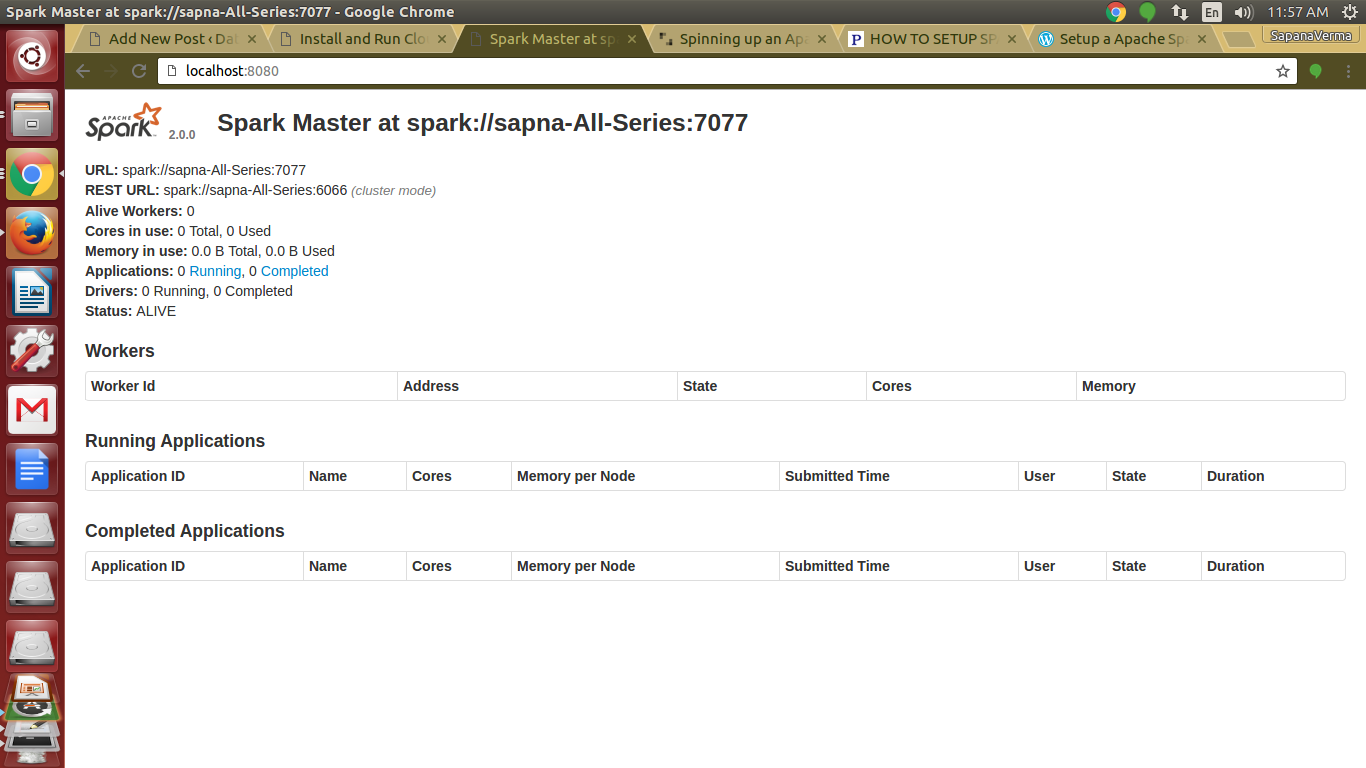

The Spark installation package contains sample applications, like the parallel calculation of Pi, that you can run to practice starting Spark jobs. To run the sample Pi calculation, use the following command: `spark-submit --deploy-mode client \`

The first parameter, `--deploy-mode`, specifies which mode to use, client or cluster. To run the same application in cluster mode, replace `--deploy-mode client` with `--deploy-mode cluster`.

When you submit a job, Spark Driver automatically starts a web UI on port 4040 that displays information about the application. However, when execution is finished, the Web UI is dismissed with the application driver and can no longer be accessed.
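As a rough sketch of what a complete submission of the sample Pi job might look like, the script below assembles the command and prints it instead of running it. The class name `org.apache.spark.examples.SparkPi`, the jar location under `$SPARK_HOME/examples/jars/`, and the trailing `10` argument are assumptions based on a stock Spark distribution, not values taken from the text above. The helper name `build_pi_command` is hypothetical.

```shell
#!/bin/sh
# Sketch: build the spark-submit command line for the sample Pi job.
# ASSUMPTIONS (not from the guide): class name, jar location, and the "10" argument.

# build_pi_command MODE -> prints the command line for MODE (client or cluster)
build_pi_command() {
    mode="$1"
    case "$mode" in
        client|cluster) ;;
        *) echo "deploy mode must be 'client' or 'cluster'" >&2; return 1 ;;
    esac
    # The escaped \$ keeps SPARK_HOME unexpanded, so the printed command can be
    # pasted on the machine where Spark is actually installed.
    printf '%s\n' "spark-submit --deploy-mode $mode --class org.apache.spark.examples.SparkPi \$SPARK_HOME/examples/jars/spark-examples*.jar 10"
}

build_pi_command client
build_pi_command cluster
```

Printing the command rather than executing it keeps the sketch safe to run on a machine without a cluster. Only the `--deploy-mode` value differs between the two printed lines.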


512m

How to Submit a Spark Application to the YARN Cluster

Applications are submitted with the spark-submit command.

Give Your YARN Containers Maximum Allowed Memory

If the memory requested is above the maximum allowed, YARN will reject creation of the container, and your Spark application won’t start.

Get the value of -allocation-mb in $HADOOP_CONF_DIR/yarn-site.xml. This is the maximum allowed value, in MB, for a single container. Make sure that values for Spark memory allocation, configured in the following section, are below the maximum.

This guide will use a sample value of 1536 for -allocation-mb. If your settings are lower, adjust the samples with your configuration.

Configure the Spark Driver Memory Allocation in Cluster Mode

In cluster mode, the Spark Driver runs inside YARN Application Master. The amount of memory requested by Spark at initialization is configured either in nf, or through the command line.

Set the default amount of memory allocated to Spark Driver in cluster mode via (this value defaults to 1G).
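To make the "stay below the maximum" rule concrete, here is a minimal sketch that checks a few driver sizes against the sample per-container maximum of 1536 MB. It assumes that the container request equals the driver memory plus an overhead of max(384 MB, 10% of driver memory), which is Spark's documented default for driver memory overhead. It also assumes the truncated `-allocation-mb` setting is YARN's per-container maximum. The function names are hypothetical, and YARN may round requests up further, so treat the numbers as a lower bound.

```shell
#!/bin/sh
# Sketch: check whether a Spark driver request fits in one YARN container.
# ASSUMPTION: request = driver memory + max(384 MB, 10% of driver memory).
# Verify the overhead rule against your Spark version before relying on it.

# container_request_mb DRIVER_MB -> prints driver memory plus overhead, in MB
container_request_mb() {
    driver_mb="$1"
    overhead_mb=$(( driver_mb / 10 ))
    if [ "$overhead_mb" -lt 384 ]; then
        overhead_mb=384
    fi
    echo $(( driver_mb + overhead_mb ))
}

# fits_in_container DRIVER_MB MAX_MB -> exit status 0 if the request fits
fits_in_container() {
    [ "$(container_request_mb "$1")" -le "$2" ]
}

max_mb=1536   # sample per-container maximum used in this guide
for driver_mb in 512 1024 1536; do
    if fits_in_container "$driver_mb" "$max_mb"; then
        echo "driver ${driver_mb}m: request $(container_request_mb "$driver_mb") MB fits"
    else
        echo "driver ${driver_mb}m: request $(container_request_mb "$driver_mb") MB exceeds ${max_mb} MB"
    fi
done
```

Under these assumptions, a driver sized at the full 1536 MB would not fit, because the overhead pushes the request past the container limit. That is why the driver setting needs headroom below the maximum rather than matching it.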


For nodes with less than 4G RAM, the default configuration is not adequate and may trigger swapping and poor performance, or even the failure of application initialization due to lack of memory.

Be sure to understand how Hadoop YARN manages memory allocation before editing Spark memory settings so that your changes are compatible with your YARN cluster’s limits.

See the Install and Configure a 3-Node Hadoop Cluster guide for more details on managing your YARN cluster’s memory.

Spark is now ready to interact with your YARN cluster.

Spark jobs can run on YARN in two modes: cluster mode and client mode. Understanding the difference between the two modes is important for choosing an appropriate memory allocation configuration, and for submitting jobs as expected.

A Spark job consists of two parts: Spark Executors that run the actual tasks, and a Spark Driver that schedules the Executors.

- Cluster mode: everything runs inside the cluster. You can start a job from your laptop and the job will continue running even if you close your computer. In this mode, the Spark Driver is encapsulated inside the YARN Application Master.
- Client mode: the Spark Driver runs on a client, such as your laptop. If the client is shut down, the job fails. Spark Executors still run on the cluster, and to schedule everything, a small YARN Application Master is created.

Client mode is well suited for interactive jobs, but applications will fail if the client stops. For long-running jobs, cluster mode is more appropriate.

Configure Memory Allocation

Allocation of Spark containers to run in YARN containers may fail if memory allocation is not configured properly.
